
Why DeepSeek Is Impressive

Discover DeepSeek and the reasons behind its cost-efficient, high-performance AI models.

Table of Contents

- Why DeepSeek Is Impressive

- 1. Unprecedented Cost Efficiency

- 2. Competitive Performance

- 3. Open-Source Commitment

- 4. Innovative Engineering and Research

- 5. Talent and Strategic Vision

- 6. Navigating Geopolitical Constraints

- 7. Rapid Market Impact

- 8. Broader Implications

- Caveats and Criticisms

- Conclusion

Why DeepSeek Is Impressive

1. Unprecedented Cost Efficiency

DeepSeek has challenged the notion that advanced AI models require massive financial investments. The company claims to have trained its DeepSeek-V3 model for approximately $6 million, a fraction of the $80–100 million reportedly spent on models like OpenAI’s GPT-4 or Meta’s Llama 3.1. This efficiency stems from:

- Optimized Hardware Use: DeepSeek trained V3 on 2,048 Nvidia H800 GPUs, a variant with reduced interconnect bandwidth designed to comply with U.S. export controls, rather than the H100, whose export to China is restricted.

- Mixture of Experts (MoE) Architecture: This approach activates only a subset of the model’s parameters for each token (37 billion out of 671 billion for DeepSeek-V3), significantly reducing computational overhead.

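- Advanced Optimization Techniques: Innovations like multi-head latent attention (MLA), which compresses the attention key-value cache, FP8 mixed-precision training, and the DualPipe pipeline-parallelism algorithm, which overlaps computation with cross-GPU communication, improve efficiency.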
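To make the Mixture of Experts idea concrete, here is a minimal pure-Python sketch of top-k expert routing, assuming a toy setup: eight hypothetical "experts" that just scale their input, made-up router scores, and k = 2. It follows the general pattern of sigmoid affinity scores renormalized over the selected experts; DeepSeek-V3’s real layers add details such as shared experts and far larger sizes, so treat this as an illustration, not DeepSeek’s implementation. The names `route_top_k` and `moe_layer` are our own.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def route_top_k(router_logits, k):
    """Pick the k experts with the highest affinity and renormalize their gates."""
    affinities = [sigmoid(z) for z in router_logits]
    chosen = sorted(range(len(affinities)), key=lambda i: affinities[i], reverse=True)[:k]
    total = sum(affinities[i] for i in chosen)
    return [(i, affinities[i] / total) for i in chosen]

def moe_layer(x, experts, router_logits, k):
    """Run only the selected experts on token x and mix their outputs by gate weight."""
    out = [0.0] * len(x)
    calls = 0
    for i, gate in route_top_k(router_logits, k):
        y = experts[i](x)  # unselected experts are never evaluated for this token
        calls += 1
        out = [o + gate * v for o, v in zip(out, y)]
    return out, calls

# Toy experts (hypothetical): expert s simply scales its input by s + 1.
experts = [lambda x, s=s: [(s + 1) * v for v in x] for s in range(8)]
token = [1.0, -2.0, 0.5]
logits = [0.1, 2.0, -1.0, 0.3, 1.5, -0.5, 0.0, 0.2]  # made-up router scores
output, calls = moe_layer(token, experts, logits, k=2)
print(calls)  # 2: only two of the eight experts ran

# Share of DeepSeek-V3's parameters active per token, using the figures above.
print(f"{37 / 671:.1%}")  # 5.5%
```

The compute saving comes from the loop in `moe_layer`: cost scales with k, not with the total number of experts.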

This cost-effectiveness democratizes AI development, enabling smaller organizations and researchers to access cutting-edge technology.
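Multi-head latent attention, listed above among DeepSeek’s optimization techniques, saves memory at inference time: instead of caching full keys and values for every attention head, the model caches one small latent vector per token and re-expands it into keys and values when needed. The sketch below shows that idea in pure Python with toy dimensions and random weights (all names hypothetical), then compares per-token, per-layer cache sizes using configuration values we believe match the DeepSeek-V3 technical report (128 heads of dimension 128, a 512-dimensional latent, plus a 64-dimensional positional key); treat those numbers as assumptions.

```python
import random

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
d_model, d_latent, n_heads, d_head = 16, 4, 2, 8  # toy sizes, chosen for illustration

W_down = rand_matrix(d_latent, d_model, rng)           # hidden state -> shared latent
W_up_k = rand_matrix(n_heads * d_head, d_latent, rng)  # latent -> keys for all heads
W_up_v = rand_matrix(n_heads * d_head, d_latent, rng)  # latent -> values for all heads

cache = []
for _ in range(5):  # five decoded tokens
    h = [rng.gauss(0, 1) for _ in range(d_model)]
    cache.append(matvec(W_down, h))  # only the small latent vector is stored

# At attention time, keys and values are rebuilt from the cached latents.
keys = [matvec(W_up_k, c) for c in cache]
values = [matvec(W_up_v, c) for c in cache]

def cached_floats_per_token(n_heads, d_head, d_latent, d_rope=0):
    standard = 2 * n_heads * d_head  # full K and V for every head
    latent = d_latent + d_rope       # one latent plus a small positional key
    return standard, latent

std, mla = cached_floats_per_token(128, 128, 512, 64)
print(std, mla, round(std / mla, 1))  # 32768 576 56.9
```

In an optimized implementation the up-projections can be folded into neighboring projections so keys and values are never fully materialized; the explicit reconstruction here is only for clarity.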

2. Competitive Performance

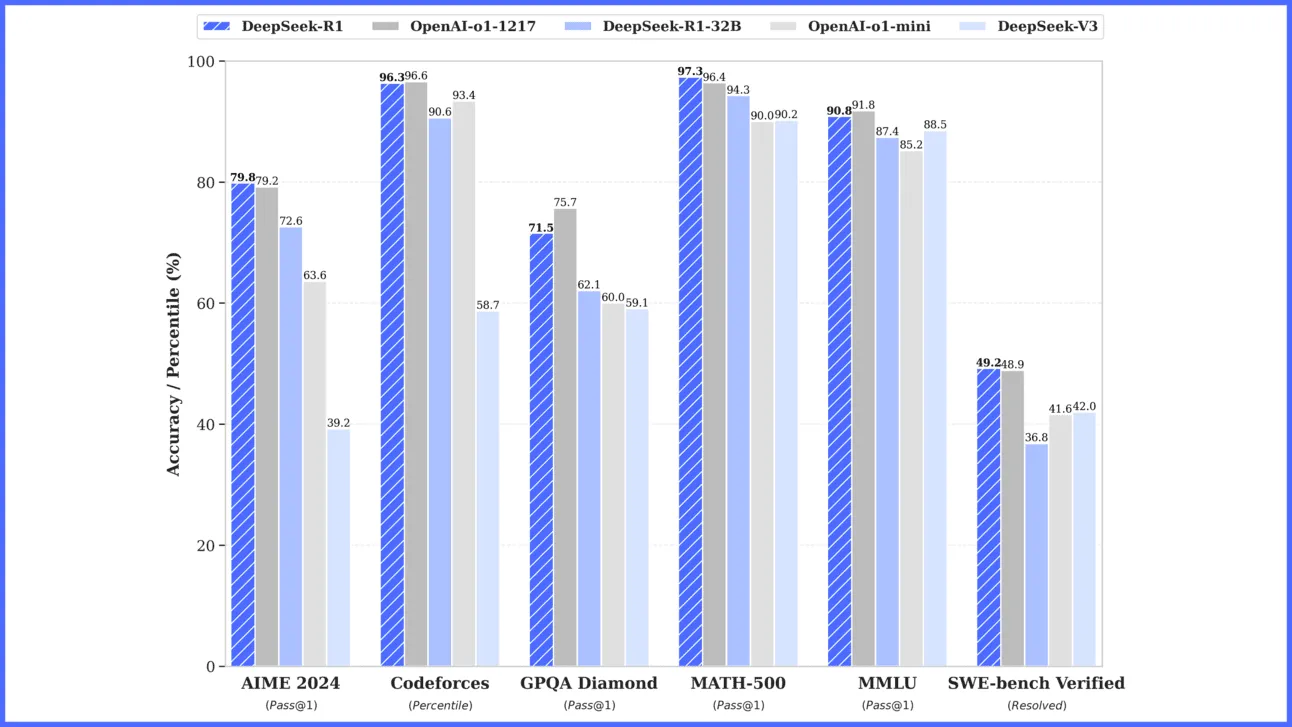

DeepSeek’s models, particularly DeepSeek-R1 and DeepSeek-V3, deliver performance comparable to or better than industry leaders like OpenAI’s o1 and Meta’s Llama on many benchmarks:

- DeepSeek-R1: Released in January 2025, it performs on par with OpenAI’s o1 on mathematics, coding, and reasoning benchmarks, according to DeepSeek’s own evaluations, often at 20–50 times lower inference cost.

- DeepSeek-V3: With 671 billion total parameters, only 37 billion of which are active per token, it achieves top-tier results across various benchmarks while requiring significantly fewer resources.

These models rely on reinforcement learning with carefully engineered, largely rule-based rewards. DeepSeek then used distillation to transfer R1’s reasoning capabilities to smaller models, which retain much of the performance with far lower computational needs.
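DeepSeek’s R1 paper describes its reinforcement learning algorithm as Group Relative Policy Optimization (GRPO): for each prompt the model samples a group of answers, scores them with rewards, and uses each answer’s reward relative to the rest of its group as the advantage, so no separate critic model is needed. The minimal sketch below covers only that advantage step, assuming a hypothetical exact-match reward and made-up samples; it omits the policy update, clipping, and KL penalty.

```python
import statistics

def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each sampled answer's reward against its own group (GRPO-style)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]

def rule_based_reward(answer, reference):
    """Hypothetical rule-based reward: 1.0 if the final answer matches, else 0.0."""
    return 1.0 if answer.strip() == reference.strip() else 0.0

# Four sampled answers to one math prompt (made-up data).
samples = ["42", "41", "42", "7"]
rewards = [rule_based_reward(s, "42") for s in samples]
advantages = group_relative_advantages(rewards)
print(rewards)                            # [1.0, 0.0, 1.0, 0.0]
print([round(a, 2) for a in advantages])  # [1.0, -1.0, 1.0, -1.0]
```

Correct answers get a positive advantage and are reinforced; incorrect ones are pushed down, all without a learned value function.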

3. Open-Source Commitment

Unlike proprietary models from OpenAI or Anthropic, DeepSeek releases its models, such as DeepSeek-R1, under the MIT License, making their weights and technical details freely available. This open-source approach:

- Fosters Global Innovation: Developers worldwide can inspect, modify, and build upon DeepSeek’s models, leading to over 700 open-source derivatives within days of R1’s release.

- Enhances Transparency: Public access to model architecture and training methods allows scrutiny for biases and ethical concerns, promoting responsible AI development.

- Challenges Industry Giants: By offering high-performance models for free, DeepSeek undercuts the business model of costly, closed-source systems.

4. Innovative Engineering and Research

DeepSeek’s rapid advancements are driven by a series of engineering breakthroughs:

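- Multi-Token Prediction: Rather than training only to predict the next token, DeepSeek-V3 also learns to predict the token after it. At inference time this extra prediction can be used for speculative decoding, which DeepSeek reports makes generation roughly 1.8 times faster while maintaining accuracy.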

- Distillation and Synthetic Data: DeepSeek fine-tuned smaller open models, such as Qwen- and Llama-based variants, on reasoning data generated by R1 itself, reducing its reliance on vast human-created datasets.

- Efficient Training Strategies: Auxiliary-loss-free load balancing keeps work evenly spread across experts without the quality penalty of an extra balancing loss term, maximizing computational efficiency during training.
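DeepSeek-V3’s technical report calls its multi-token technique multi-token prediction (MTP): at each position the model is trained on the next token and, through an extra module, the token after it. The report states that when this extra prediction is reused for speculative decoding, the second token is accepted about 85–90% of the time. The sketch below, with hypothetical function names and a toy sentence, shows how the training targets line up and a simple expected-tokens-per-step estimate that ignores verification overhead.

```python
def mtp_targets(tokens, depth=2):
    """For each position, list the next `depth` tokens the model is trained to predict."""
    return [
        (tokens[i], tokens[i + 1 : i + 1 + depth])
        for i in range(len(tokens) - depth)
    ]

def expected_tokens_per_step(acceptance_rate, extra_predictions=1):
    """Expected tokens emitted per decoding step when each extra draft token is
    accepted with the given probability (acceptance stops at the first rejection)."""
    expected, p = 1.0, 1.0
    for _ in range(extra_predictions):
        p *= acceptance_rate
        expected += p
    return expected

tokens = ["The", "cat", "sat", "on", "the", "mat"]
for context_token, targets in mtp_targets(tokens):
    print(context_token, "->", targets)  # e.g. The -> ['cat', 'sat']

for rate in (0.85, 0.90):
    print(rate, round(expected_tokens_per_step(rate), 2))
```

With one extra prediction, the estimate is 1.85 to 1.90 tokens per step, which is consistent with the roughly 1.8 times speedup DeepSeek reports once real overheads are included.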

These innovations demonstrate that smarter algorithms and optimization can rival brute-force scaling, shifting the AI paradigm from “bigger is better” to “smarter is better.”
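Auxiliary-loss-free load balancing, as described in the DeepSeek-V3 technical report, adds a per-expert bias to the routing scores that is used only to choose experts, then nudges each bias after every training step: down for overloaded experts, up for underloaded ones. The sketch below is a simplified illustration with hypothetical names, made-up affinities for six tokens over three experts, k = 1, and an arbitrary update speed `gamma`; real models route among far more experts.

```python
def select_experts(affinities, biases, k):
    """Choose the top-k experts by affinity + bias (the bias affects selection only)."""
    scores = [a + b for a, b in zip(affinities, biases)]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]

def update_biases(biases, loads, gamma):
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(loads) / len(loads)
    new = []
    for b, load in zip(biases, loads):
        if load > mean_load:
            new.append(b - gamma)
        elif load < mean_load:
            new.append(b + gamma)
        else:
            new.append(b)
    return new

# Made-up affinities for six tokens over three experts; expert 0 is everyone's favorite.
tokens = [
    [0.9, 0.5, 0.1],
    [0.8, 0.7, 0.2],
    [0.7, 0.2, 0.6],
    [0.6, 0.5, 0.55],
    [0.9, 0.1, 0.85],
    [0.5, 0.45, 0.3],
]
biases = [0.0, 0.0, 0.0]
gamma = 0.1  # hypothetical bias update speed
history = []
for step in range(2):
    loads = [0, 0, 0]
    for aff in tokens:
        for e in select_experts(aff, biases, k=1):
            loads[e] += 1
    history.append(loads)
    biases = update_biases(biases, loads, gamma)
print(history)  # [[6, 0, 0], [1, 2, 3]]
```

After a single bias update, the busiest expert’s load drops from 6 tokens to 3, without adding any term to the training loss.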

5. Talent and Strategic Vision

DeepSeek’s success is underpinned by its unique team and leadership:

- Young, Skilled Workforce: The company recruits fresh graduates from top Chinese universities like Tsinghua and Peking, prioritizing talent over experience. This fosters a collaborative, innovative culture.

- Leadership by Liang Wenfeng: Founder and CEO Liang, a quantitative hedge fund manager who co-founded High-Flyer, brings a data-driven, optimization-focused mindset to AI research. Funding DeepSeek through High-Flyer lets the company pursue long-term research without commercial pressure.

- Diverse Expertise: DeepSeek reportedly hires beyond computer science, drawing on fields such as mathematics and even poetry, to broaden its models’ knowledge.

6. Navigating Geopolitical Constraints

Despite U.S. export controls on advanced AI chips since 2022, DeepSeek achieved its breakthroughs using a stockpile of Nvidia A100 chips reportedly acquired before the ban, along with the less powerful H800.

This ingenuity highlights China’s ability to innovate under restrictions. The news sent “shockwaves” through the industry and contributed to a roughly $1 trillion sell-off in tech stocks on January 27, 2025, including a $589 billion single-day drop in Nvidia’s market value.

7. Rapid Market Impact

DeepSeek’s AI assistant, powered by DeepSeek-R1, topped the free-app chart on Apple’s U.S. App Store within days of its January 2025 release, overtaking ChatGPT.

Its accessibility via web, mobile, and API, combined with low inference costs, has driven widespread adoption by researchers, startups, and enterprises.

8. Broader Implications

DeepSeek’s achievements have far-reaching implications:

- Democratizing AI: Open-source models lower barriers for smaller entities, fostering a more diverse AI ecosystem.

- Sustainability: Efficient models can reduce energy consumption, easing concerns about AI’s environmental impact.

- Geopolitical Shift: DeepSeek’s success challenges U.S. dominance in AI, prompting debates about export controls and global competitiveness.

Caveats and Criticisms

While DeepSeek’s claims are impressive, some skepticism remains:

- Cost Transparency: The $6 million figure covers only the final training run; DeepSeek’s own technical report notes that it excludes the cost of prior research and ablation experiments. Critics argue total R&D costs are far higher, possibly exceeding $1 billion in chip investments.

- Security Concerns: Shortly after R1’s launch, security researchers found a publicly accessible DeepSeek database exposing chat histories, API keys, and backend logs, raising questions about the company’s cybersecurity practices.

- Data Ethics: OpenAI has alleged that DeepSeek used outputs from OpenAI models to train its own systems, a claim that has not been independently verified.
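The gap between the headline figure and total spending is easier to see with the arithmetic spelled out. As we understand the DeepSeek-V3 technical report, the roughly $6 million number is about 2.788 million H800 GPU-hours multiplied by an assumed rental price of $2 per GPU-hour; both inputs are treated as assumptions here, and the helper name is hypothetical. Nothing in this calculation accounts for buying GPUs, salaries, data, or earlier experiments.

```python
def training_run_cost(gpu_hours, price_per_gpu_hour):
    """Rental-equivalent cost of one training run; excludes hardware purchases,
    salaries, data, and earlier experiments."""
    return gpu_hours * price_per_gpu_hour

# Figures as we understand them from the DeepSeek-V3 report (assumptions here).
gpu_hours = 2_788_000  # H800 GPU-hours for the full training run
price = 2.0            # assumed rental price in USD per H800 GPU-hour
cost = training_run_cost(gpu_hours, price)
print(f"${cost / 1e6:.2f}M")  # $5.58M

# Wall-clock sanity check on the 2,048-GPU cluster mentioned earlier.
days = gpu_hours / 2048 / 24
print(f"{days:.0f} days")  # 57 days
```

The result matches the headline claim only as a rental-equivalent figure for a single run of under two months, which is exactly why critics treat it as a lower bound.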

Conclusion

DeepSeek’s meteoric rise is a testament to its innovative engineering, cost-efficient strategies, and commitment to open-source AI.

By achieving performance on par with industry giants at a fraction of the cost, it has redefined the AI landscape, challenging established players and democratizing access to advanced technology.

While questions about total costs and security persist, DeepSeek’s breakthroughs signal a new era of AI development driven by efficiency, transparency, and global collaboration.

As Silicon Valley and policymakers grapple with its implications, DeepSeek remains a beacon of what’s possible when innovation meets ambition.